Ethics

The consensus from the national workshops held to develop this roadmap was that ethics deserved immediate attention. The World Commission on the Ethics of Scientific Knowledge and Technology (COMEST) released a report on robotics ethics in 2017 that acts as a good starting point [CRE17]. Many participants asked whether, just because we can develop new technologies in robotics, we should, and to what extent. Examples of the dilemmas facing the developers of new technologies include:

- On a fully automated farm, is it okay for robots to terminate livestock?

- In pest control, who decides which pests it is okay to control?

- In a fatal accident involving an autonomous vehicle, who is deemed responsible – the robot?

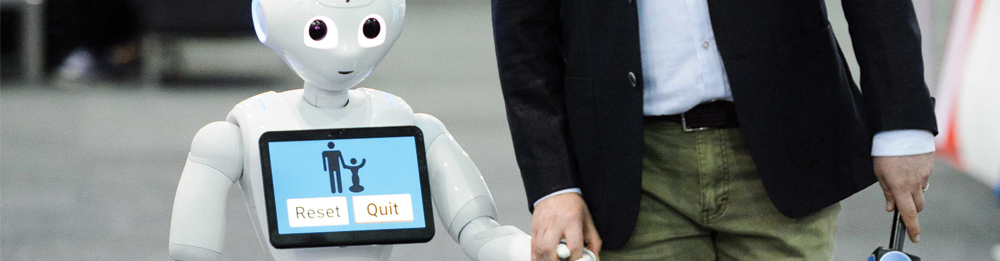

- In aged care, is it okay to have a robot perform duties that involve direct contact with a person such as massage?

A set of national guidelines or a charter that considers the policy implications is urgently required. These ethical considerations must be translated into guidelines, regulation and legislation, an area where government will play a crucial role. This was acknowledged in an Australian Government consultation paper released in September 2017 as part of the development of a new digital economy strategy [DE17]. The paper notes the need to “consider the social and ethical implications of our regulations relating to emerging technologies, such as AI and autonomous systems”, especially when they are involved in making decisions. Some of the broader ethical issues relating to autonomous vehicles were canvassed in the House of Representatives Standing Committee on Industry, Innovation, Science and Resources report ‘Social issues relating to land-based automated vehicles in Australia’ [PCA17].

As robots increasingly affect daily life, consideration must be given to how humans design, construct, use and treat robots and other AI [NAT16]. These ethical considerations, or “roboethics” [RE16], are human-centric. By contrast, the term “machine ethics” describes the behaviour of robots themselves and whether they are considered artificial moral agents with their own rights.

Roboethics is concerned with developing tools and frameworks to promote and encourage the development of robotics for the advancement of human society and the individual, and to prevent its misuse. Using roboethics, the EU has devised a general ethical framework for robotics to protect humanity from robots [EU16], based on the following principles:

- Protecting humans from harm caused by robots

- Respecting a person's refusal of care given by a robot

- Protecting human liberty in the face of robots

- Protecting humanity against privacy breaches committed by a robot

- Managing personal data processed by robots

- Protecting humanity against the risk of manipulation by robots

- Avoiding the dissolution of social ties

- Equal access to progress in robotics

- Restricting human access to enhancement technologies.

Australia needs to consider its own set of roboethical principles, suitable for the Australian context, that can be used to guide the development and application of new robotic technologies. It has been more than 10 years since South Korea announced plans to create the world’s first roboethics charter [NS07], intended to prevent the social ills that might arise from having inadequate legal measures in place to deal with robots in society. South Korea has the world’s highest density of industrial robots: 631 robots per 10,000 manufacturing employees, compared with Australia’s 84 [IFRIR17]. The charter details the responsibilities of manufacturers, the rights and responsibilities of users, and the rights and responsibilities of robots. Some robot rights are written into Korean law, affording robots the following fundamental rights: to exist without fear of injury or death, and to live an existence free from systematic abuse. In Korea it is illegal to deliberately damage or destroy a robot, or to treat a robot in a way that may be construed as deliberately and inordinately abusive.