Sensing: Robust Vision

The Robust Vision program is about “Sensing”, that is, creating robots that can see in all conditions. The key question we are addressing is: “How can innovations in existing computer vision and robotic vision techniques, and in vision sensing hardware, enable robots to perform well under the wide range of challenging conditions they will encounter, and how can we apply computer vision technology in the real world?”

To answer this, the Robust Vision program is developing robotic vision algorithms and novel vision hardware that let robots perceive their surroundings well enough to act purposefully and perform their tasks in a variety of conditions, including high glare, fog, smoke or dust, rain, sleet, wind or heat.

The Robust Vision program is also working with innovative sensing hardware, such as hyperspectral cameras, and developing it further to support robots operating under challenging viewing conditions such as poor light or partial obscuration.

RV projects

RV1—ROBUST ROBOTIC VISUAL RECOGNITION FOR ADVERSE CONDITIONS

Research leader: Michael Milford (CI)

Research team: Hongdong Li (CI), Jonathan Roberts (CI), Chunhua Shen (CI), Niko Sünderhauf (CI), Abigail Allwood (NASA JPL), Nigel Boswell (Caterpillar), David Thompson (NASA JPL), Juxi Leitner (RF), Chuong Nguyen (RF), Sourav Garg (PhD), Sean McMahon (PhD), Medhani Menikdiwela (PhD), James Mount (PhD), James Sergeant (PhD)

Project aim: How can we use and innovate on existing computer vision and robotic vision techniques so that they perform well in the challenging conditions robots will encounter in real-world situations? To answer this question, the Robust Robotic Visual Recognition for Adverse Conditions project is developing robust algorithms that solve the fundamental robotic tasks of place and object recognition under challenging environmental conditions, such as darkness, adverse atmospheric and weather conditions and seasonal change, and is translating them into real-world industrial applications.

A kangaroo captured in darkness using a low-light camera at the Centre’s Robotic Vision Summer School retreat in Kioloa, New South Wales.

RV2—NOVEL VISUAL SENSING AND HYBRID HARDWARE FOR ROBOTIC OPERATION IN ADVERSE CONDITIONS AND FOR DIFFICULT OBJECTS

Research leader: Chuong Nguyen (RF)

Research team: Peter Corke (CI), Robert Mahony (CI), Michael Milford (CI), Donald Dansereau (Research Affiliate), Adam Jacobson (PhD), Dan Richards (PhD), James Sergeant (PhD), Dorian Tsai (PhD)

Project aim: The key question being addressed in this project is “How can existing and new specialised vision hardware be exploited and developed, in conjunction with new algorithms, to enable robotic vision systems to operate well under the wide range of challenging conditions that robots and applied computer vision technology encounter in the real world?”

To address this, the project advances the performance of robot vision algorithms using low-light cameras, rotational filters, hyperspectral cameras and thermal cameras to improve robot autonomy under all viewing conditions.

In developing new algorithms that exploit the particular advantages these innovative cameras offer, we have set new performance benchmarks in tasks such as scene understanding, place recognition and object recognition. These hardware-software solutions can deal with challenges such as reflections, transparency and low-light conditions, and can also be applied to all Centre projects involving visual sensing, making those projects’ systems more robust under adverse conditions.

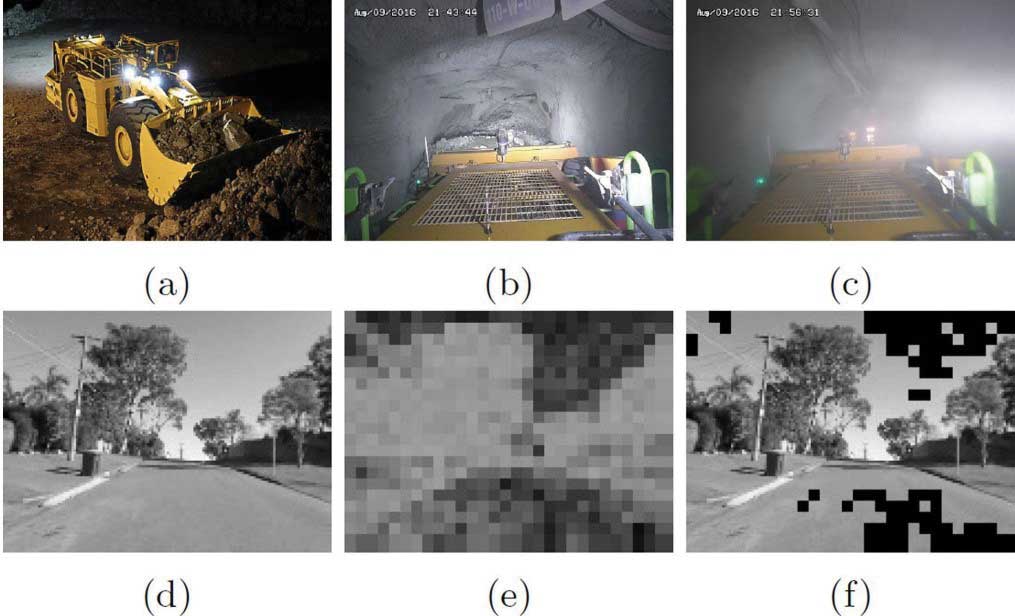

The challenge of perception and navigation in underground and above-ground environments.

Visual surface-based positioning method for vehicles.

Key Results in 2017

The first stage of the robotic vision positioning system for underground mining vehicles in collaboration with Caterpillar, Mining3 and the Queensland Government was completed.

This project is part of an Advance Queensland Innovation Partnerships Grant. An associated publication, “Enhancing Underground Visual Place Recognition with Shannon Entropy Saliency”, was presented at the 2017 Australasian Conference on Robotics and Automation (ACRA) in December. CI Michael Milford and Associated RFs Adam Jacobson and Fan Zeng completed three sets of field trips to two underground mining sites in different parts of Australia (site names are withheld for confidentiality reasons).
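The paper title above names Shannon entropy saliency, which is not described further here. The Python sketch below illustrates one generic way entropy can act as a saliency measure, for illustration only. Image patches whose intensity histograms have higher entropy, meaning more visual variety, are ranked as more informative. This matters in underground scenes dominated by dark, uniform regions. The patch size, the 16-bin histogram and the helper names `shannon_entropy` and `salient_patches` are hypothetical choices, not the published method.

```python
import math
from collections import Counter
from typing import List, Sequence, Tuple


def shannon_entropy(pixels: Sequence[int], bins: int = 16) -> float:
    """Shannon entropy (in bits) of an 8-bit intensity histogram.

    The 16-bin quantisation is a hypothetical choice for illustration.
    """
    if not pixels:
        return 0.0
    # Quantise 0-255 intensities into `bins` equal-width bins.
    counts = Counter(min(p * bins // 256, bins - 1) for p in pixels)
    n = len(pixels)
    return -sum((c / n) * math.log2(c / n) for c in counts.values())


def salient_patches(image: List[List[int]], patch: int, keep: int) -> List[Tuple[int, int]]:
    """Return top-left corners of the `keep` highest-entropy square patches.

    Hypothetical helper: tiles the image into non-overlapping patches and
    ranks them by entropy, treating high-entropy patches as salient.
    """
    scored = []
    for r in range(0, len(image) - patch + 1, patch):
        for c in range(0, len(image[0]) - patch + 1, patch):
            px = [image[i][j] for i in range(r, r + patch) for j in range(c, c + patch)]
            scored.append((shannon_entropy(px), (r, c)))
    scored.sort(key=lambda t: t[0], reverse=True)
    return [pos for _, pos in scored[:keep]]
```

In a place recognition system, features from the selected patches could be weighted more heavily when comparing images. Uniform regions such as dark tunnel walls would then contribute less to the match.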

Daniel Richards was confirmed as a PhD researcher in early 2017 and continued his low-light vision research, including research trips to the Defence Science and Technology (DST) Group and the development of custom low-light hardware systems.

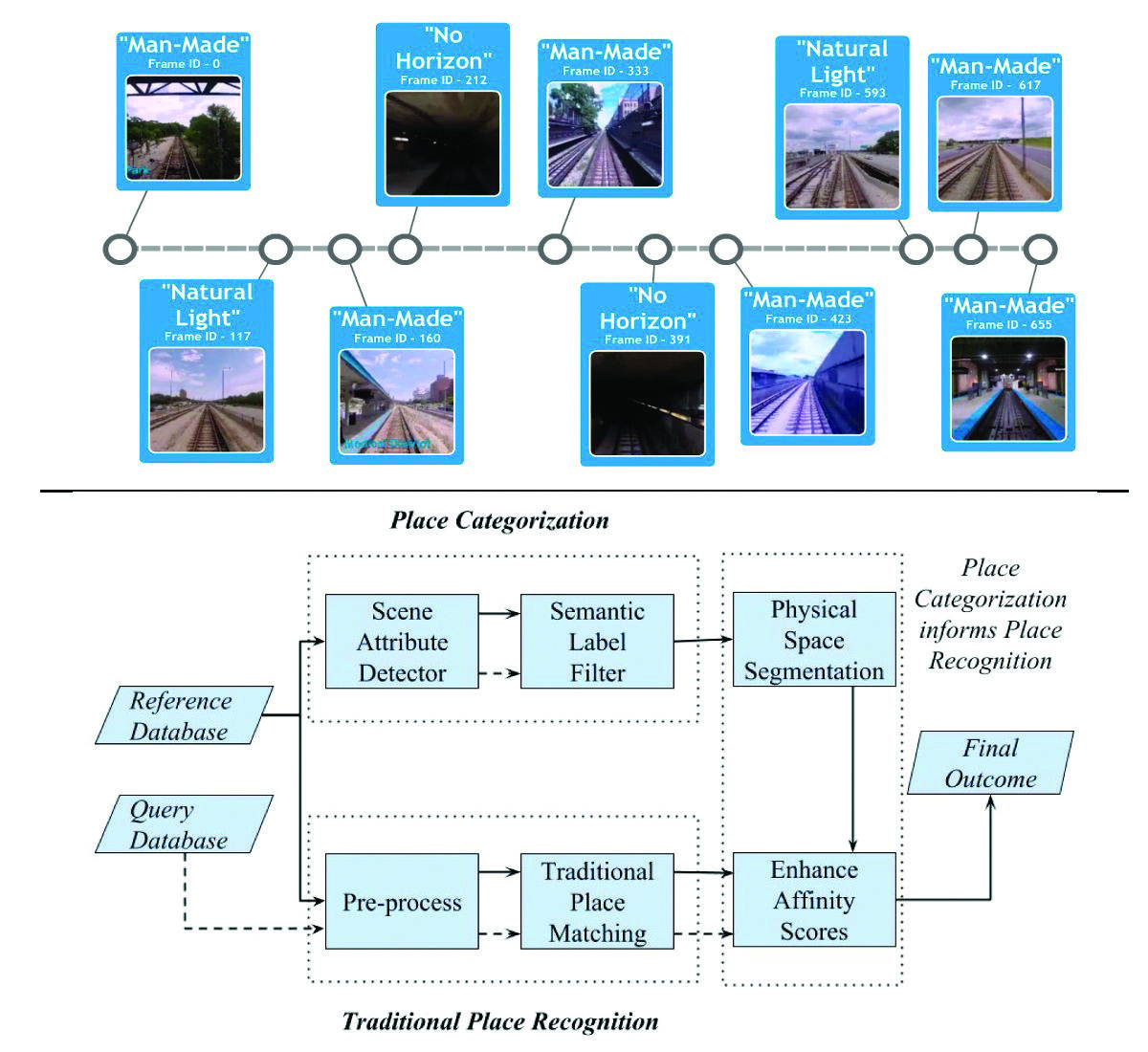

Combined semantic-traditional place recognition pipeline.

PhD researcher Sourav Garg’s paper “Improving Condition- and Environment-Invariant Place Recognition with Semantic Place Categorisation”, co-authored with Associated RF Adam Jacobson, Swagat Kumar (Tata Consultancy) and CI Michael Milford, was accepted and presented at IROS 2017. Ordinary or specific place recognition involves reconciling current sensory input on a robot or mobile platform with previously gathered or stored maps, and recognising where the robot is within that map. Place categorisation, on the other hand, involves recognising the type of place: kitchen, supermarket, ocean, forest and so on. Building on previous work in this area, this work leverages the complementary nature of place recognition and place categorisation to create a new hybrid place recognition system that uses place context to inform place recognition.
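The following Python sketch makes "using place context to inform place recognition" concrete. It scores each stored place by a weighted sum of appearance similarity and place-category similarity. This is an assumed, simplified fusion rule for illustration only. The weighting parameter `alpha`, the descriptor format and the function names are hypothetical, and the sketch does not reproduce the paper's pipeline.

```python
import math
from typing import List, Sequence


def cosine(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine similarity; returns 0.0 if either vector is all zeros."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def hybrid_match(query_desc: Sequence[float],
                 query_cat: Sequence[float],
                 map_descs: List[Sequence[float]],
                 map_cats: List[Sequence[float]],
                 alpha: float = 0.7) -> int:
    """Index of the best-matching map place, or -1 if the map is empty.

    query_desc / map_descs: appearance descriptors (hypothetical format).
    query_cat / map_cats: place-category probabilities, e.g. [kitchen, forest].
    alpha: hypothetical weight of appearance vs. category similarity.
    """
    best_idx, best_score = -1, -math.inf
    for i, (desc, cat) in enumerate(zip(map_descs, map_cats)):
        # Fuse "what it looks like" with "what kind of place it is".
        score = alpha * cosine(query_desc, desc) + (1 - alpha) * cosine(query_cat, cat)
        if score > best_score:
            best_idx, best_score = i, score
    return best_idx
```

Consider a query with appearance `[1.0, 0.0]` and category `[0.0, 1.0]` ("forest"), matched against places with descriptors `[[1.0, 0.0], [0.8, 0.6]]` and categories `[[1.0, 0.0], [0.0, 1.0]]`. With `alpha=1.0` (appearance only) the query matches place 0. With `alpha=0.5` the category evidence shifts the match to place 1. This shows how context can resolve cases where appearance alone is misleading, for example under changed lighting or seasons.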

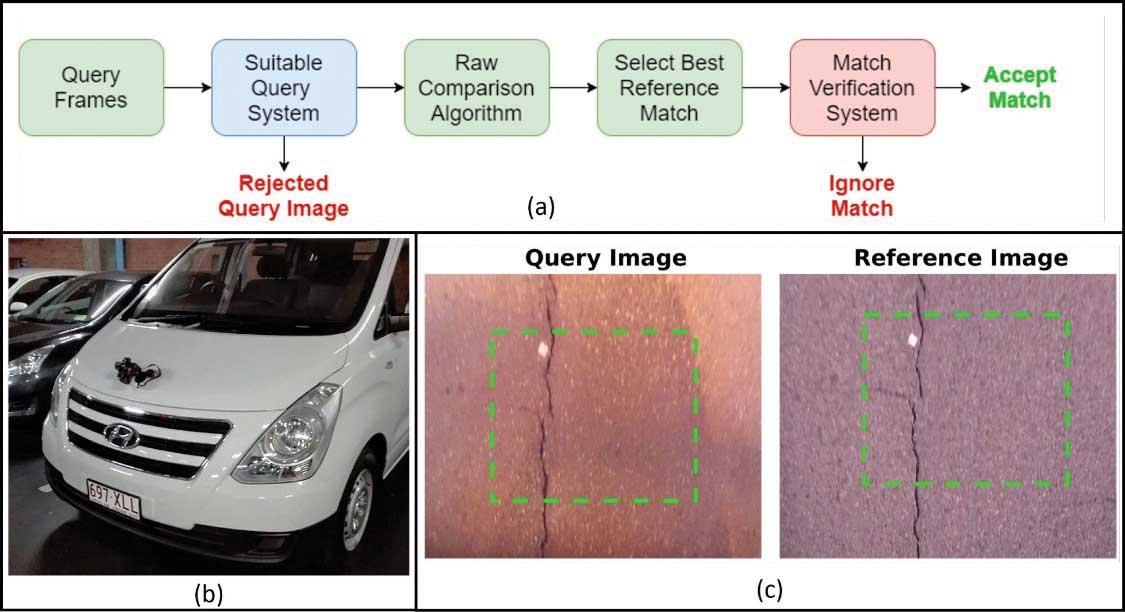

PhD researcher James Mount also presented an accepted paper at ACRA 2017, titled “Image Rejection and Match Verification to Improve Surface-Based Localisation” and co-authored with CI Michael Milford.

Positioning (or localisation) is a crucial capability for almost any vehicle or robot that moves. In this research, led by James, we developed techniques for localising with very high accuracy and low latency by using cameras to image the road surface, both during the day and at night. Such a positioning system would complement, rather than completely replace, other positioning systems such as GPS.
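The two ideas in the paper title, image rejection and match verification, can be illustrated with a generic template-matching sketch in Python. The sketch assumes road-surface images have already been reduced to equal-length, flattened intensity patches. It compares them using zero-mean normalised cross-correlation (NCC). The thresholds `min_var`, `min_score` and `min_margin` and the function names are hypothetical, and this is not the method from the paper.

```python
import math
from typing import List, Optional, Sequence


def ncc(a: Sequence[float], b: Sequence[float]) -> float:
    """Zero-mean normalised cross-correlation of two equal-length patches."""
    n = len(a)
    mean_a, mean_b = sum(a) / n, sum(b) / n
    da = [x - mean_a for x in a]
    db = [y - mean_b for y in b]
    denom = math.sqrt(sum(x * x for x in da) * sum(y * y for y in db))
    return sum(x * y for x, y in zip(da, db)) / denom if denom else 0.0


def localise(query: Sequence[float],
             references: List[Sequence[float]],
             min_var: float = 25.0,      # hypothetical texture threshold
             min_score: float = 0.8,     # hypothetical NCC acceptance threshold
             min_margin: float = 0.1     # hypothetical distinctiveness margin
             ) -> Optional[int]:
    """Return the index of the matching reference patch, or None if rejected."""
    if not references:
        return None
    n = len(query)
    mean = sum(query) / n
    variance = sum((x - mean) ** 2 for x in query) / n
    # Image rejection: skip near-featureless frames (e.g. washed out by glare).
    if variance < min_var:
        return None
    scores = sorted(((ncc(query, ref), i) for i, ref in enumerate(references)),
                    reverse=True)
    best_score, best_idx = scores[0]
    runner_up = scores[1][0] if len(scores) > 1 else -1.0
    # Match verification: accept only a strong match that clearly beats the runner-up.
    if best_score < min_score or best_score - runner_up < min_margin:
        return None
    return best_idx
```

Returning `None` instead of a low-confidence position suits a complementary positioning system: when the surface match is unreliable, the system can decline to answer and leave GPS or other sensors to carry the estimate.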

James also created and ran an all-day show titled “Self-Driving Cars – Making an Ethical Choice” at QUT’s Robotronica in August. The show ran hourly, seven times in total, to more than a thousand audience members. Its premise was simple: using an actual miniature autonomous car, show and discuss the effects of the various ethical considerations a self-driving car must take into account. To make the show interactive, the audience voted live via smartphone or tablet on the decision they thought the robot should make. The day was a huge success, and the researchers continue to engage with industry, government and the public on the impacts and opportunities of self-driving cars and the artificial intelligence technology that drives them.

Full house for the show at QUT’s Robotronica, totalling more than 1000 people over the day.

CI Michael Milford presenting at the “Closing the Technology Loop on Self-Driving Cars” forum.

CI Michael Milford hosted a major self-driving car forum at QUT titled “Closing the Technology Loop on Self-Driving Cars” in June. More than 100 representatives from state and local government, industry and start-ups discussed and debated autonomous vehicles.